by The Honorable John McClellan Marshall

“Man is a slow, sloppy, and brilliant thinker; computers are fast, accurate, and stupid.”

John Pfeiffer

Modern technology has had an undeniable impact on a wide range of social institutions. Not the least of these is the legal community and its efforts to seek the truth of a given situation. Once upon a time, indeed for centuries, lawyers were trained to present as “evidence” either oral testimony or physical items that supported their contentions. The 21st Century, however, has confronted attorneys with an entirely new reality. Conventional items that would be introduced into court as “evidence” may or may not be “authentic”. This problem is a direct result of the technology that generates the “evidence”. In order to assist in the process of “finding the truth”, the rules that apply to such items must accommodate this reality.

It is axiomatic that in the context of litigation, the key to success is strategic planning. What enables the plan to work is the evidence that is offered. It is ironic that in a culture that is dominated by technological innovation the humanities remain somewhat in control of the scientific evidence that might be offered. In many cases the judge simply applies common sense to the decision process, allowing the evidence to be admitted if “it will assist the trier of fact.” That said, there is an entire body of law, commonly referred to as “the rules of evidence”. These “rules” have grown up over the centuries of trials and define what will “assist the trier of fact” and what will not be allowed. Although there is a tendency to look at these rules as defining what will be “included”, a closer examination reveals that they are in fact rules of “exclusion”. In order for an item of evidence to become part of the record before the court and jury, it must not be subject to an objection based upon one of these rules.

For example, the best known of these rules is the “hearsay” rule. Simply put, it states that a statement that is made out of court, but which is offered in court to assert the truth of the matter contained therein, is not to be admitted. Objections based upon the hearsay rule abound, but, as a result of judicial evolution, so have the exceptions to the rule. To date, there are at least nineteen traditional exceptions to the hearsay rule. Even these, however, are not as clear as they might seem, since there are exceptions to the exceptions in some cases. To overcome the hearsay objection, one must demonstrate the exception. The other rules of evidence have similar limitations that must be met in order for the evidence to be admitted. When the subject of the evidence is either technology or some field of academic expertise such as medicine, the concept of “technoevidence” arises.

Technoevidence is defined simply as “information that would not be available to the trier of fact (whether judge or jury) no matter how smart the witness, in the absence of modern technology”. The logical, and legal, corollary is that it should be evidence that cannot be deduced by the employment of sheer brain power by a human being. Such evidence ultimately must be deemed to be admissible in court because of its seeming intrinsic “trustworthiness”. That trustworthiness will necessarily be based upon technological considerations. In this circumstance, the precision of the evidence will likely be directly dependent upon the sophistication of the instruments employed to analyze the factual input.

For example, in the case of an automobile accident involving alcohol or drugs, there is generally equipment that is employed to determine whether the legal limitations have been exceeded. In addition to a simple analysis of blood or breath as to the substances involved, there is an “error bar”.[1] Obviously, it would be necessary to ensure that the equipment was properly calibrated in order to know whether or not the results are accurate and within the error bar limits. The failure to recognize the need for such regular re-calibration of the machine renders the outcome suspect, if not unusable.[2] Similarly, in a case involving DNA evidence, the result is characteristically expressed as a probability that can range up to 99.975% likelihood. Even there, however, there is an error bar of some level that can impact the result. These variations in the means to generate technoevidence necessarily lead to what might be termed the “Turing limit.”[3]
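The role of the error bar described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `classify_reading`, the limit of 0.08, the error bar of 0.010, and the sample readings are all assumptions chosen for illustration, not values drawn from any particular statute or instrument.

```python
def classify_reading(measured: float, error: float, limit: float) -> str:
    """Compare a measurement and its error bar against a legal limit.

    All three arguments are in the same unit. `error` is the "plus or
    minus" figure reported for the instrument (hypothetical here).
    """
    if error < 0:
        raise ValueError("error bar must be non-negative")
    low, high = measured - error, measured + error
    if low > limit:
        return "above limit"    # the whole interval is over the limit
    if high < limit:
        return "below limit"    # the whole interval is under the limit
    return "inconclusive"       # the limit falls inside the error bar


# Hypothetical readings against an assumed limit of 0.08
for reading in (0.120, 0.085, 0.050):
    print(reading, classify_reading(reading, error=0.010, limit=0.08))
```

A reading whose error bar straddles the limit is the situation in which the calibration history of the machine is most likely to decide whether the result is usable.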

The “Turing test” is based upon the notion that a machine can be created that will respond in a fashion that will demonstrate a machine’s ability to exhibit intelligent behaviour.[4] In order to pass the “Turing test”, the response must be equivalent to, or indistinguishable from, that of a human. By extension, such a machine would be deemed to exhibit “artificial intelligence”, or AI. At the threshold, there are varying definitions of “intelligence”, so the application of “artificial” to “intelligence” has become problematic.[5] The premise that Turing’s imitation game and test is a valid starting point is itself limited by the very fact that, even in Turing’s construct, the human being always knows that he or she is communicating with a machine. In the utilization of technology by human researchers, that knowledge defines the “Turing limit.” The human being never fails to know that, no matter the sophistication of the machine, the response is still a machine response.[6] As a result, the evidence thus adduced is always lacking mathematical, and possibly mechanical, precision in its presentation because the machine’s response is circumscribed by a binary world.

To the extent that a machine is required in the process of generating technoevidence, there is a problem of implicit bias. This arises from the reality that modern computing devices characteristically accept inquiries, and respond, in binary fashion. The absence of nuance in the capabilities of the machine adds another element to the “Turing limit.” Perhaps the most recent examples of these limitations involve the facial recognition and voice recognition software that has been used in criminal cases. The sheer variety of facial shapes, skin tones, eye coloration, etc. has caused facial recognition to become rather suspect. Similarly, voice recognition software that cannot tell a Welshman from a native of Micronesia has proved to be of little value despite high expectations.

Indeed, a witness with a Ph.D. in economics and a calculator is the very embodiment of the “Turing limit” in court. This is because the testimony actually emanates from a machine that can only supply the answer that it is programmed to supply. Anything else, including a hesitation, may be the result of a “fibrillation”. Such “fibrillation”, or random background noise, can arise from simple temperature changes or surges in electrical current in the system that make the answer untrustworthy. Why is that important?

On the one hand, this is important because the increasing societal reliance on machines that may or may not be “fibrillating” when responding may well be misplaced. The difficulty lies in the inability of the human to determine, without satisfactory testing processes and mechanisms, whether, and how severely, the machine may be responding to random noise. Indeed, it is the gap between “trust” and “verify” that is at the heart of the problem. Certainly the machine can produce the evidence, but the question remains whether or not it is authentic, i.e. “true”. After all, human beings have experience as part of general learning ability; as Anaïs Nin pointed out, “We do not see things as they are. . .we see things as we are.”[7] That reality must be a part of the presentation of what the machine has determined.

It is also important in part because, to borrow the language of quantum mechanics, there is such a thing as “superposition” that allows many “facts” to occupy many locations at the same time, as seen by the eye of the observer. Once they are sorted out and measured they “collapse” into one location. Considering this in the context of a legal case, from the perspective of the lawyer/observer, there are many “facts” that will comprise the “big picture” of a case. At the start, they may occupy many spaces in the strategic plan of the lawyer. As time goes on, however, they will gradually begin to fit into a pattern that, when it is time to go to trial, makes up one picture, one location. The very flexibility of the prioritization of the data with the aid of a machine, however, leads to the possibility that there may be no absolute “fact” for an attorney to present in court.[8] The determination of “fact vel non” is not the province of science, though. It is the responsibility of the trier of fact, whether judge or jury, based upon the evidence. As noted by the late Justice Oliver Wendell Holmes, Jr., “The life of the law has not been logic; it has been experience. . . .”[9]

On a social level, the expansion of the scope of the relationship between the human observer and the technology at hand and its consequent dilution of the exercise of human mental faculties may have led to a more subtle and damaging series of phenomena. With the societal emphasis on speed, the pressure to “produce” and to succeed even at the elementary school level, technology presents an unprecedented opportunity for humanity to get lost in the complexities of the machines that facilitate that speed and success.

At the heart of the question of the utility of technoevidence in court is the reality of the function of a court in society. In essence, the court is a system created by human beings to resolve disputes that people cannot solve for themselves. To that extent, it is a very human system and is only valid if it serves human needs. Those needs include the ethical and moral principles that have undergirded human society for millennia. The system, therefore, puts humanity first, and technoevidence is incidental to the process. This injects into the judicial process the concept of cyberethics, and that adds another dimension to the judicial decision-making that is involved. Cyberethics is “the relationship between the ethical and legal systems that have been developed to serve humanity from ancient times to the present as expressed in our philosophical and ethical systems of thought and the judicial process as contrasted with the ability of computer-driven technology to operate outside those conventions with almost no limits”.[10]

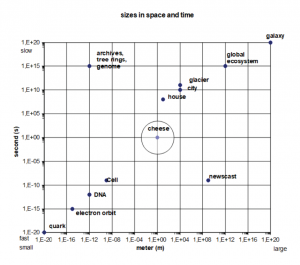

Fig. 1 illustrates the analytical path that the attorney or judge will likely take when considering the utility of technoevidence in a given case. Obviously, this is primarily a litigation concept, but it would have similar utility in a mediation or arbitration context as well.[11]

Fig. 1. The Bait ©Ramon Guardans. Used by permission.[11]

To the lower left, at DNA for example, are matters that cannot be perceived by the senses without technological assistance. This represents the lower end of the technoevidentiary trajectory. As the “flow” moves across the circle toward the upper right-hand corner, the view of the observer becomes steadily broader. The evidentiary picture has evolved in complexity from the microscopic to the visible then to the macro, if not galactic, scale. It is true that much of the evidence in a conventional trial will be confined to the circle. In a car crash, for example, in one case the evidence showed the distinct imprint of the front of a particular sports car in the door of the other car. There was no need of an expert to tell the jury who hit whom. On either side of the circle, though, technology may well be of importance in the presentation of the “facts” that comprise the case at hand. It follows that the farther away from the circle is the source of the information, the greater the need for technology.

The arrival of computer technology wrought a tidal change in this situation by expanding the ability of an individual or a corporate body to store ever increasing amounts of information for an indeterminate period of time. The advent of the Apollo Program and the diversification in the other aspects of space exploration expanded the amount of raw data exponentially in a matter of only a few years.

That pace has not only not slackened, it has increased dramatically during the first two decades of the 21st Century. The very existence of the internet provides access to an enormous body of information that may well now be beyond the ability of the scholar to examine in a meaningful way. That is beside the point, except to note that the internet itself has become another resource to be consulted. Indeed, this has become a major problem that is known by its shorthand designation of “Big Data”. In tandem with the technological impact of computers, it has been noted that “we have gone from an age that was meaning rich but data poor, to one that is data rich but meaning poor. . . [, and] this is an epistemological revolution as fundamental as the Copernican revolution.”[12] Academic research in recent years has been influenced dramatically by the ability of the personal computer to allow the student to search the internet for information, but the question as to the quality of the information remains.

One estimate is that approximately 16.3 zettabytes of information, roughly the equivalent of 16.3 trillion gigabytes, is being produced each year. By 2025, this number should increase ten times.[13] Of course, such a vast quantity of information will contain much that is simply irrelevant to the real world as it appears at the time. The problem is that what may seem irrelevant in the 21st Century could be crucial information in the 30th Century. An example of this problem is the definition of “uranium” in a late 19th Century dictionary as “a worthless white metal found in the tailings of gold mines.” Discarding information simply because we in this century think that it is meaningless is probably not a good option.
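The unit conversion in the estimate above can be checked with a short calculation. The sketch below assumes the SI (decimal) definitions of the zettabyte and the gigabyte; the function name `zettabytes_to_gigabytes` is hypothetical, and the tenfold projection simply restates the figure quoted in the text.

```python
from decimal import Decimal

# SI (decimal) definitions: 1 ZB = 10**21 bytes, 1 GB = 10**9 bytes
GIGABYTES_PER_ZETTABYTE = 10**21 // 10**9  # one trillion


def zettabytes_to_gigabytes(zb: Decimal) -> Decimal:
    """Convert a quantity in zettabytes to gigabytes (hypothetical helper)."""
    return zb * GIGABYTES_PER_ZETTABYTE


annual_zb = Decimal("16.3")           # estimate quoted in the text
projected_zb = annual_zb * 10         # the tenfold increase quoted in the text

print(f"{zettabytes_to_gigabytes(annual_zb):,.0f} GB per year")
print(f"{projected_zb} ZB per year after a tenfold increase")
```

Since one zettabyte is one trillion gigabytes under these definitions, the two figures in the estimate describe the same quantity.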

The practical corollary to this problem is how to store this material for future use in the face of digital storage programs that may change every few years. It is clear that the very definition of “fact” has been changed by the evolution of the technology that “creates” the information at hand. For example, prior to the middle of the 20th Century, data generally was maintained in a “hard copy” form of some sort, such as paper, metal engravings, paintings, or even stone. At the 2015 meeting of the American Association for the Advancement of Science, the vice president and chief internet evangelist of Google, Vinton G. Cerf, one of the co-creators of the TCP/IP protocol, spoke in favor of the creation of “digital vellum.”[14] By this he advocated a system that would be capable of preserving the meaning of the digital records that we create and making them retrievable over periods of hundreds or thousands of years. The obvious analog to this is the Rosetta Stone, and it should be well within the ability of our society to create such a mechanism.

While there is no question that the “digital vellum” concept has merit, there is an additional issue that makes the “memory hole” even more of an existential threat. This is the growth of functional illiteracy in civilization in general. Professor Mihai Nadin of The University of Texas at Dallas has addressed this to some extent in his book The Civilization of Illiteracy.[15] He asserts that while literacy was essential to the development of an efficient society, it is no longer adequate to sustain that efficiency. It is too slow, too ambiguous, and too subjective to be efficient. The result is that new languages, more efficient ones, are coming into the world, some driven by, or even created by, machine-created programs.

On the broader view of society, this would of necessity involve attention to translational issues because the words used in earlier records might or might not reflect accurately what is meant today by the same word.[16] Left to its own devices, this process of remotion could gradually re-standardize the societal view of the world, leading to a sort of collective unknowing that arises from a tenebra ignorantiae (shadow of ignorance).[17] The translational problem is easily illustrated in the modern world where the word “love” in English has more than thirty translations in Greek, each of which is a nuanced version of the concept. Which one will be selected to be included in the “digital vellum” is a major issue for consideration.

Unfortunately, as has been noted elsewhere, the problem of “Big Data” clouds the ability of the individual researcher to remain focused. The reason for this is quite simple. Not only is the sheer volume of data beyond the ability of an individual to analyze with any assurance of accuracy, but the problem is also compounded by a shortage, if not absence, of metadata that could otherwise be helpful in validating the data in its present form. After all, when data is created and then modified, the trail of modification is known as metadata. Even if a given version does not make it into the final draft, the metadata allows a researcher to track what had existed in prior versions. This can be important in that, if an error has crept into the development of a particular project, the metadata trail may make it possible to find out where it happened and how bad the error really was.
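The idea of a metadata trail that records each modification can be illustrated with a minimal Python sketch. Everything here is hypothetical: the `Revision` record, the helper names, and the sample contract language are assumptions made for illustration and do not describe any particular document management system.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Revision:
    number: int
    author: str
    text: str
    digest: str  # SHA-256 of the text, so later tampering is detectable


def add_revision(trail: list, author: str, text: str) -> list:
    """Return a new trail with one more revision appended."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return trail + [Revision(len(trail) + 1, author, text, digest)]


def first_revision_with(trail: list, phrase: str):
    """Find the earliest revision in which `phrase` appears, if any."""
    for rev in trail:
        if phrase in rev.text:
            return rev
    return None


# Hypothetical drafting history of a single contract clause
trail = []
trail = add_revision(trail, "drafter A", "Payment is due in 30 days.")
trail = add_revision(trail, "drafter B", "Payment is due in 300 days.")
trail = add_revision(trail, "drafter A", "Payment is due in 300 days, in cash.")

culprit = first_revision_with(trail, "300 days")
print(culprit.number, culprit.author)  # revision 2, drafter B
```

With such a trail the error can be traced to the revision in which it first appeared; without it, only the final text survives and the origin of the error cannot be recovered.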

In the world of the attorney, the metadata would include various drafts of contractual documents, wills, trusts, or even pleadings. Similarly, an attorney would need to be able to review critically the metadata underlying expert testimony that is going to be offered in court. Without the awareness of the need for that critical approach, there is a real danger of misleading the court as to admissibility vel non. Put another way, the principal flaw in “Big Data” is that incomplete or erroneous data at the front end will likely lead to erroneous conclusions, and there is no amount of technology that can overcome that.

Indeed, it may be that because the volume of information available in the modern world cannot be managed by the technology that currently exists, the “Turing limit” simply cannot be avoided. The researcher must depend upon the ability of the machine to sort through the information to find what is needed in order to reach an “expert” opinion, and that will depend upon the keywords chosen by the researcher, not the machine. Such choices inevitably introduce another layer of implicit bias in the reliability of what the machine can and cannot do for the researcher. Similarly, modern technology allows for the manipulation of the data that is available to the extent of “fudging” the numbers to get a result that fits the model of the experiment at hand. Again, the lack of metadata makes these fake “facts” difficult to challenge and potentially creates a very bad outcome in the world of research or in court.

A corollary that is very sensitive, of course, is the ability of a person to fabricate a persona that portrays him or her as an “expert” with a résumé that is false, but difficult to detect as to its falsity. An example of this was an “expert” psychologist who testified in numerous cases as to the mental conditions of one of the parties. The “credentials” included a Ph.D. from a well-known university. Normally, this would be accepted at face value, but a determined opposing attorney contacted the university registrar to verify the degree. The registrar replied that there was no such record of the person in question. At trial, this was revealed, and the person was prosecuted for the prior perjured testimony. Of course, the cases in which he had testified were either overturned for a new trial or thrown out. The ability to falsify credentials in the 21st Century is even easier, because of photoshopping and other means of counterfeiting records with little or no metadata trail to show what was done.

Artificial intelligence (AI)-generated synthetic video, text, or audio demonstrative evidence is also subject to deliberate manipulation. The more sophisticated ones are known generally as “deepfakes”, and the amateurish ones are “cheapfakes”. The key concept, though, is “-fake”. The problem for the courts in such a presentation is how to determine the fundamental integrity of the presentation that is being offered. With the technical advances in the production of deepfakes, however, this will become increasingly difficult, though it could produce an entirely new industry for experts who can uncover the fakes and validate the genuine. Juridically, this would be akin to determining the genuineness of an allegedly forged signature. Yet should judges not be aware of the issue, lest technology that is flawed, but undetectable, erode confidence in the integrity of the judicial process?[18] Indeed, in such a case the trier of fact is faced directly with the “Turing limit” as applied to admissibility of evidence.

The reality of the 21st Century is that most persons under the age of forty have grown up in a world that has never been without personal computers, whether desktop or smartphone (yes, they are computers). The advent and rapid innovation of such technologies make it easy for human beings to compartmentalize their existence and, thus, dissociate from their fellows. The dissociation phenomenon ironically causes the actions of the group to be seen as more significant than those of the individual. This can create a subtle isolation of individual human initiative as giving rise to “elitism” on a social level that may be discouraged. The shift of focus away from people to increased dependence upon machines mimics much of the world in which humanity now finds itself. As Tacitus once said, “Because they didn’t know better, they called it ‘civilization,’ when it was part of their slavery [idque apud imperitos humanitas vocabatur, cum pars servitutis esset]”[19]. If this thought process should be combined with the problems inherent in “Big Data”, then the ability of society and its “experts” to rely on their analyses is potentially badly flawed.

Similarly, the dissociation among individuals tends to isolate them from “how the world works” in relation to technology. In other words, just as people compartmentalize themselves from their fellows, so they tend to compartmentalize their humanity and separate it from machines that are part of their environment. To that extent, the potential and growing “enslavement” of the judicial process to technological capability is something to which lawyers and judges need to be sensitive. In the use of such evidence, it is imperative that the qualifications of the person who will be testifying, the “expert”, be clear and unequivocal. Ironically, when dealing in the legal context, whether the evidence is related to expert testimony or technology, technoevidence may well be one of the most frequently cited benefits of the modern technology.

In this context, reference to self-driving automobiles provides a useful example. When such a vehicle crashes, injuring the driver, the legal question becomes “who is responsible?” Initially, the standard approach is to examine the driver as to his or her condition at the time. Was the driver drunk, asleep, experiencing a physical emergency? Each of these questions must be answered to bring that part of the “big picture” into some level of focus. Beyond that is the nature of the vehicle and its technology. For example, was the autopilot engaged or not; did the airbags deploy properly or not at all [this has been a problem in recent years]; how fast was the vehicle traveling; what is the level of technology that would have kept the vehicle on the road and did it alert the driver? The answers to these questions clearly point to the developer of the software and the design of the vehicle that incorporated this technology. Such an analysis could well follow traditional lines of liability relative to the putting of such a device into the stream of commerce without adequate testing. If the software were of a sufficiently new type, then the issue would be what the industry standards for design and testing might be.[20] If they had been exceeded by the new technology, then the manufacturer might not be held liable, but the jury question would remain.[21]

In the United States, the general rule is that the proffered “expert” evidence must be examined by the judge, both as to methodology and as to the qualifications of the testifying witness before it can be considered.[22] This “gatekeeper” function vested in the judiciary places the judge in a rather interesting position in relation to the scientist. After all, in most instances, the judge is the product of an undergraduate liberal arts educational background that is reinforced in law school by virtue of the extensive reading that is customarily required. When confronted with evidence grounded in the sciences, the judge must now evaluate on a scientific level the quality of the information.[23]

At the threshold of the inquiry, the “Turing limit” is in play in the process of determining what evidence will be allowed. By definition, this process moves from the “big picture” of what the expert might offer through the “micro”, sometimes to the “nano” of inquiry. In effect, it takes the listener through Fig. 1 in reverse, i.e. from the upper right to the lower left. Of necessity, the “observer effect” is now the product of what the judge sees, not merely what the lawyers might have argued. At each point in the testimony of the expert, there would have to be some explanation as to what the technology shows the situation to be. In other words, “What does the machine say is the truth”? Put another way, does the expert testimony fail the “Turing test”? The result is a “trajectory” along which the evidence can be driven by technology as required.

One of the major characteristics of the traditional Anglo-American judicial process is the “adversary system” that places parties and their lawyers in opposition in a given lawsuit. The party who has brought the suit, generally known as the plaintiff, has the responsibility to introduce evidence to support the claim that is asserted. This is called the “burden of persuasion” because in order to win, the plaintiff must persuade the judge or jury with evidence that its version of the “facts” is correct. The defendant, obviously, has the duty, or burden, to respond to the plaintiff with evidence to negate the claim or establish a justification for its actions. In the realm of technoevidence as it evolves in the future, this burden will likely be compounded by the presentation of competing “experts”. The accompanying competing information and technology become part of the methodology which the judge must evaluate and rule upon in real time, as to its accuracy or relevance to the case at hand.

“Persuasion” in this situation would require lawyers to have dived deep into the metadata employed by their “expert” and have it either peer reviewed or generally in use throughout the professional world of which the expert is a part. Anything short of that probably would not pass muster with a judge whose background is not highly technical to begin with. Indeed, there simply are not law schools that are equipped to deal with such an issue at the present time. . .but perhaps there should be.

Whether or not it is made explicit to the judge and jury, the testimony in a technoevidentiary presentation is going to be subject to the “Turing limit” if only because it is not possible to cross-examine the machine. In a criminal case, that is of major importance because of the Sixth Amendment right to confront the “witness”. That said, the “Turing limit”, because of the relationship of the machine to the expert, will likely become explicit upon cross-examination of an expert. In that process, the limitations of the machine and the operator will also become apparent to the trier of fact. By definition, the human testimony is, therefore, subject to the limitations of the technology.

For example, in the case of medical testimony regarding the contents of the blood of a deceased person, care must be taken to ensure that the capability of the machine is broad enough to include the maximum of variables, rather than simply to test for the presence of one or two items. Even so, the reality is that the test likely will uncover only what it is designed to reveal and not anything else. Again, the implicit bias of the machine impacts the reliability of the evidence. Put another way, “[T]he field of artificial intelligence has been very successful in developing artificial systems that perform these tasks without featuring intelligence.”[24] Indeed, a properly designed piece of test equipment would be capable of bringing many factors into the discussion, leaving the judge to decide which ones were “relevant” to the case. In such a situation, it probably would be much better to have too much information than not enough to reach a valid decision. It would then be up to the lawyers to persuade the jury as to which factors were essential to their finding of “facts”.

An example of this problem and the technological and judicial evolution that it has impacted is the use of DNA evidence in criminal cases. From the discovery in 1953 of its double helix structure, the utility of DNA as a means of identification expanded through the remainder of the 20th Century. Criminal law kept pace with the technology in that defendants in rape cases particularly were subject to being identified through DNA left behind after the attack.

The number of men so convicted is not known for sure. What is known is that, as the technology for mapping the DNA improved, samples kept from many years earlier were re-examined and found not to belong to the person who was convicted. So scientifically and factually accurate was the DNA evidence thus adduced that by the end of the 20th Century many prisoners were exonerated and released by the advancement of technology in this area. For jurors and judges, likewise, the conclusiveness of DNA evidence became so pervasive that without it, the ability of the state to secure a conviction has been and is often severely crippled. This was in part a function of a television program dealing with violent crime known as “CSI”, and the impact was “the CSI effect” that led to the “no DNA” acquittals.

In such a situation, the “Turing limit” itself is, in effect, “sidelined” by information that may have become societally embedded (and not formally in evidence) to the point that technoevidence may have little effect on the outcome of a trial. This shift in viewpoint on the part of the jury demonstrates the result in Wigner’s paradox of the collapse of a universe of “facts” into one point that makes all others of little or no importance. As a result, the “Turing limit” may be evident in the unwillingness of juries to accept unquestioningly the “expert” assessments offered in court.

Indeed, the more complex those assessments might be, the less likely a trier of fact will pay attention to them. This is especially true when the topic is simply numbers, whether it be a chart or a calculation. No matter the credentials of the expert witness nor the precision of the machine that is being “introduced” to the trier of fact, the evidence simply may be ignored. . .and the law will allow it. This is because of the deference given to the verdict of the jury, particularly in the United States as a matter of public policy. This policy is expressed in the Sixth and Seventh Amendments to the Constitution of the United States, in which the right to a trial by jury is guaranteed in both civil and criminal cases. Indeed, the right to a trial by jury is the only one mentioned twice in the Bill of Rights.

This societal “sidelining” is an interesting phenomenon because it is not necessarily a matter of the evidence, but may be rooted more deeply in societal behavior. Just as dissociation occurs among people, so there is a growing distrust of machines by people if trusting them is going to limit individual liberty in movement or other activity. It may well be that, just at the time when technoevidence is more needed than ever in an increasingly complex society, it will in fact be less persuasive to the society that it is intended to benefit. If so, then judges and lawyers who are looking to depend upon technoevidence as crucial to a judicial outcome may be ignoring the “Turing limit” in favor of reaching an outcome, no matter how that outcome might conflict with the reality of human needs. Such an attitude, while in harmony with the notion of precision in the quality of evidence, may well be at odds with the values that underlie the judicial process. Indeed, the “Turing limit” could be the dividing line in the judicial process between judges who experience the law and those who become bogged down in technique. ♦

Copyright 2020 © John McClellan Marshall

All Rights Reserved

First published in Journal of AI and Society, 2021, DOI 10.1007/s00146-020-01139-z

John is a graduate of Virginia Military Institute, Vanderbilt University, and Southern Methodist University School of Law. His judicial career spans 44 years and includes civil and family law cases. He is a member of the Texas Bar College and the International Academy of Astronautics. He serves as a professor of law at UMCS in Poland and was recently elected as an Honorary Member of the Polish Judges Association “Iustitia.” John is married with two daughters.

Texas Bar College 30 Year Fellow, Joined 1991

[1] The error bar shows error or uncertainty in a reported measurement, sometimes represented as a “plus or minus” number. It compensates for mechanical variations in the capabilities of machines and allows the analysis to be “generally accurate.” It is sometimes graphically presented.

[2] This is particularly true in the case of tests to determine intoxication while operating motor vehicles.

[3] The concept of “thinking machines” was initially put forward by Alan M. Turing in his paper Intelligent Machinery in 1948.

[4] The Turing test was originally called the imitation game by Alan Turing. The test results do not depend on the machine’s ability to give correct answers to questions, only on how closely its answers resemble those a human would give. The test was introduced by Turing in his 1950 paper, “Computing Machinery and Intelligence”, while working at the University of Manchester (Turing, 1950; p. 460).

[5] See F. Chollet, “On the Measure of Intelligence”, arXiv:1911.01547v2 Cornell University (2019) for a discussion of the two principal intelligence defining constructs in the 21st Century.

[6] This situation is illustrated in the motion picture Ex Machina. The ultimate union of man and machine in an AI scenario was depicted in Star Trek: The Motion Picture, which, of course, saved the Earth from destruction. Cf. Lady Ada Lovelace’s Objection, as noted in her memoir on Babbage’s Analytical Engine, 1842.

[7] Anaïs Nin, Seduction of the Minotaur, Vol. 5 of Cities of the Interior (1959).

[8] This is known as “Wigner’s paradox.” See Musser, “Paradox Puts Objectivity On Shaky Footing,” Science, 889 (August 2020).

[9] Holmes, The Common Law, Boston, 1881, at 1. See also Marshall, “Examining Judicial Decision-Making: An Axiological Analytical Tool,” 29 Studia Iuridica Lublinensia 3, 55-65 (Summer 2020).

[10] This concept was originally articulated by the author in the paper “The Terminator Missed a Chip!: Cyberethics”, presented at the International Astronautical Congress of 1995, Oslo and originally published by the American Institute of Aeronautics and Astronautics, Inc. with permission. Released to IAF/AIAA to publish in all forms. The corollary is the ability of technology to drive alterations in those conventions without regard to human input in a societal “default” to the machines.

[11] Guardans and Czeglédy, “Oriented Flows: The Molecular Biology and Political Economy of the Stew,” 42 Leonardo 2 (2009), 145.

[12] Boorstin, Daniel J., Cleopatra’s Nose: Essays on the Unexpected, (New York: Random House, 1994).

[13] J. Engebretson, “Data, Data, Everywhere”, Baylor Arts and Sciences (Fall 2018), 24.

[14] The abstract of this presentation can be found at: https://aaas.confex.com/aaas/2015/webprogram/Paper14064.html. The entire text is not available.

[15] Dresden University Press, 1997.

[16] See Marshall, “The Modern Memory Hole: Cyberethics Unchained,” 3 Athenaeum Review, 94-101 (October 2019) for an extended discussion of the problems of data retention.

[17] See Aquinas, Commentum in Quatuor Libros Sententiarum, I, VII, 1,1. Parma Edition.

[18] See M. Reynolds, “Courts and lawyers struggle with growing prevalence of deepfakes”, ABA Journal (Trial and Litigation), June 9, 2020. See also, the Star Trek episode “Court Martial” for the problem faced by a court when presented with manipulated computer-generated evidence.

[19] Agricola (98), Book 1, paragraph 21.

[20] For a discussion of the practical aspects of the application of AI in a conventional legal environment focusing on the search capabilities and electronic discovery pitfalls that are presented, see Miller, “Benefits of Artificial Intelligence: What Have You Done For Me Lately?”, available at https://legal.thomsonreuters.com/en/insights/articles.

[21] See the discussion in Su, Pascal, “Who to Blame in an Autonomous Vehicle Crash?”, Mensa Bulletin, July 2020.

[22] Daubert v. Merrell Dow Pharmaceuticals, Inc., 509 U.S. 579, 113 S. Ct. 2786, 125 L. Ed. 2d 469 (1993).

[23] See Allen, ed. “Artificial Intelligence in Our Legal System”, 59 No. 1 The Judges’ Journal (ABA: February 2020) for a discussion of both the immediate and potential impacts of AI on the judicial process, leading to the conclusion that, while the potential impact is enormous, the problems of contact between the judicial process and the “AI Ecosystem” have yet to be measured.

[24] See José Hernández-Orallo, “Evaluation in artificial intelligence: from task-oriented to ability-oriented measurement”, Artificial Intelligence Review, 397–447 (2017).